Can disclosure of scoring rubric for basic clinical skills improve objective structured clinical examination?

Abstract

Purpose:

To determine whether disclosing the scoring rubric for basic clinical skills can improve medical students' scores on the objective structured clinical examination (OSCE).

Methods:

The clinical performance scores of medical students at one university (study group, n=345) were compared with those of students at another university (control group, n=1,847). Both groups took an identical OSCE. The OSCE rubric was not revealed to the study group until they were in the last 2 years of medical school.

Results:

There was no significant difference in overall scores between the two groups either before or after disclosure of the rubric. However, before the disclosure, the history taking and physical examination scores of the study group were lower than those of the control group. After the disclosure, the scores in the control group were either unchanged or slightly increased. Trend analysis of scores demonstrated that history taking and physical examination scores in the study group increased significantly during the 2 years after the disclosure.

Conclusion:

This study revealed that disclosing the basic clinical skills rubric to medical students could enhance their clinical performance, particularly their history taking and physical examination scores.

Introduction

Medical education largely consists of basic research on reasoning, problem-based learning, performance assessment, and continuing education [1]. Performance assessment plays a particularly decisive role in changing established paradigms of medical education, and thus contributes significantly to promoting medical students' interest and motivation in their learning [2,3].

The objective structured clinical examination (OSCE), introduced by Harden et al. [4], has been the “gold standard for clinical performance assessment.” A well-designed OSCE in particular draws out students' motivation to study, consequently strengthening educational efficacy [5]. However, it remains unclear whether a specific educational method has a positive effect on performance in assessments such as the OSCE. It has been proposed that lengthy training programs can enhance OSCE scores [6], while others have suggested that analytic reasoning can lead to higher diagnostic accuracy among novice doctors [7]. Another view is that a whole-task OSCE based on hypothesis-driven physical examination heightens diagnostic reasoning compared to an OSCE focusing on a standardized patient [8]. Therefore, the purpose of this study was to determine whether disclosure of the basic clinical skills rubric could affect performance assessment in medical students.

Subjects and methods

The clinical performance scores of Ajou University medical students (study group, n=345) were compared with those of students at another university (control group, n=1,847) over the 2 years before and the 2 years after disclosure of the basic clinical skills guideline (2011–2014). The scoring rubric was not revealed to the study group until they were in the last 2 years of medical school. All students took the examination as fourth-year medical students, and the OSCE was developed by the Seoul Gyeonggi Clinical Performance Examination (CPX) Consortium. The overall assessment and the detailed evaluation items (history taking, physical examination, physician cordiality, patient education, and physician/patient relationships) were analyzed for the two groups, and the results were compared. Yearly trends in the study group's scores (perfect score of 100) were also analyzed. SPSS version 12.0 (SPSS Inc., Chicago, USA) was used for statistical analysis with the t-test. Statistical significance was defined as a p-value of less than 0.05.
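The between-group comparison described above can be sketched from summary statistics alone. The following is a minimal illustration, not the authors' procedure: the study used SPSS and the text says only "t-test", so the Welch (unequal-variance) form is an assumption, `welch_t` is a hypothetical helper name, and the inputs are made-up values rather than study data.

```python
import math


def welch_t(mean1, sd1, n1, mean2, sd2, n2):
    """Return (t, df) for Welch's unequal-variance t-test from summary statistics.

    Assumption: the source does not state which t-test variant was used.
    """
    v1 = sd1 ** 2 / n1  # squared standard error of group 1
    v2 = sd2 ** 2 / n2  # squared standard error of group 2
    t = (mean1 - mean2) / math.sqrt(v1 + v2)
    # Welch-Satterthwaite approximation of the degrees of freedom
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


# Illustrative inputs only (NOT study data): two groups of 4 with equal spread.
t_stat, dof = welch_t(10.0, 2.0, 4, 8.0, 2.0, 4)
print(round(t_stat, 4), round(dof, 2))
```

The resulting statistic would then be compared against the t distribution with `dof` degrees of freedom to obtain a p-value, which is judged against the 0.05 threshold stated above.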

Results

There was no significant (p>0.05) difference in overall assessment between the two groups either before or after disclosure of the basic clinical skills rubrics. However, in 2011 (before disclosure of the rubrics), history taking scores (61.13±6.70 vs. 66.15±7.47, p<0.001) and physical examination scores (49.39±11.08 vs. 52.60±11.11, p=0.012) in the study group were significantly lower than those of the control group. In 2012, only physical examination scores in the study group were lower than those of the control group (45.38±10.57 vs. 50.25±9.51, p<0.001).

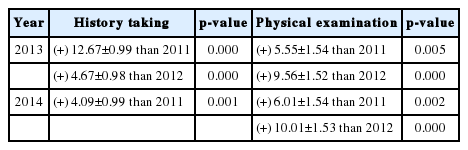

In 2013 and 2014, the 2 years after the disclosure of rubrics, there was no significant (p>0.05) difference in academic scores between the two groups. History taking scores (73.81±5.92 vs. 72.67±6.29, p=0.127; 65.22±6.67 vs. 66.24±7.23, p=0.241) and physical examination scores (54.94±8.16 vs. 55.61±10.01, p=0.557; 55.39±10.18 vs. 54.05±8.87, p=0.228) in the control group were either unchanged or slightly increased. Trend analysis of each year's scores demonstrated that history taking and physical examination scores in the study group increased significantly during the 2 years after the disclosure. History taking scores in 2013 were 4.68 to 12.68 points higher than those before the disclosure of rubrics. Similarly, physical examination scores in 2013 were 5.55 to 9.56 points higher than those before the disclosure. In 2014, the second year after the disclosure, they were 6.01 to 10.01 points higher (Tables 1, 2).
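The reported ranges for the physical examination gains appear to correspond to the differences between the study group's mean in a post-disclosure year and its mean in each of the two pre-disclosure years. The sketch below checks that reading using the study-group means quoted in the text (taking the first value of each pair as the study group, as in the 2011 comparison). The reading itself is an assumption, and `gains` is a hypothetical helper.

```python
# Study-group mean physical examination scores as quoted in the Results text.
physical_exam = {2011: 49.39, 2012: 45.38, 2013: 54.94, 2014: 55.39}


def gains(scores, after_year, baseline_years=(2011, 2012)):
    """Return sorted differences between one post-disclosure year and each baseline year."""
    return sorted(round(scores[after_year] - scores[b], 2) for b in baseline_years)


gains_2013 = gains(physical_exam, 2013)
gains_2014 = gains(physical_exam, 2014)
print(gains_2013, gains_2014)
```

Under this reading, the 2013 differences match the reported 5.55 to 9.56 points. For 2014 the rounded means give 6.00 to 10.01 points, so the reported lower bound of 6.01 may reflect means that were not rounded before subtraction.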

Comparison of Grade Point Average between Subjects before and after Disclosure of Clinical Skills Guidelines

Discussion

Most previous evaluations of performance assessment have focused on developing detailed scenarios and more objective scoring checklists. To our knowledge, this is the first study to evaluate the relationship between the disclosure of OSCE rubrics and the scores obtained on the OSCE.

In the two-arm comparison, which is a stronger design than a one-arm repeated-measures analysis, prior disclosure did not make the study group better than the control group: there was no significant difference in overall assessment between the experimental and control groups either before or after disclosure of the rubrics.

However, disclosure of basic clinical skill rubrics to medical students could enhance their clinical performance scores, particularly in history taking and physical examinations.

Despite the high cost of OSCE programs, many teaching institutions in developed countries still include the OSCE in medical education and medical licensing examination procedures because it is considered the gold standard in clinical assessment [9]. Recent trends towards outcome-based medical education have given the OSCE an even more significant place. Further trial-and-error investigations are needed to determine which medical education method best promotes clinical performance. In the Republic of Korea, the OSCE has been included in the national medical licensing examination since 2009. Before that, there was considerable debate among medical school professors regarding its practicability and educational effectiveness [10]. Even if it is proven to be effective, there is a paucity of understanding of these educational programs, leading to classroom instruction based on traditional lecture formats.

With these ideas as a basis, our teaching faculty formulated an educational program for clinical skills in which objective rubrics were provided and disclosed before the examination. It was used to teach only the essential and necessary material within a set period of time.

The program rubric created and developed by our institution comprised 41 clinical skill items included in the national medical licensure examination. The items were drawn up by faculty members in their respective professional disciplines. Thereafter, each rubric was scrutinized by a second faculty member whose academic field had no relationship with the topic being inspected, so that the guideline could be provided to students in a succinct and clear form. Although this rubric formulation process was arduous and time consuming, we found profound changes in both our faculty members and students. The rubric could also be used to assess faculty colleagues. Many teachers had been instructing students at such a high professional level that some received unfavorable feedback from students on their lectures, so objective teaching assessment was badly needed. Students were thus provided with accurate clinical skill rubrics that could change previously difficult lectures into a comprehensive program that could be rehearsed in real practical and clinical settings. Consequently, these students gained self-confidence. The disclosed rubrics resulted in better history taking and physical examination scores.

Although we were able to collect many student test scores from the CPX consortium data, our data did not include the demographic details of the subjects. Further study may be necessary to evaluate any effect of demographics on the examination scores.

For outcome-based learning to be successful, changes in assessment must follow. To establish changes in assessment, the teaching and learning processes must also change. We were able to observe positive student clinical performance after implementing changes in our teaching programs. More active student participation in future education programs is needed to develop faculty teaching methods.

Acknowledgements

None.

Notes

Funding

None.

Conflicts of interest

None.